Your Strategic Edge in a Rapidly Evolving AI Landscape

This week’s AI developments continue to reshape how marketers and business leaders should think about deploying and managing intelligence at scale. From deprecations in core LLMs to empowering non-technical users with no-code AI apps, the landscape is bustling with progress—and opportunity.

GPT-4.5 Deprecation Signals Accelerated Innovation Cycles

OpenAI has announced the full retirement of GPT-4.5 Preview by July 14, 2025, with support removed from GitHub Copilot as early as July 7. This swift deprecation underscores OpenAI’s push toward consolidating its model suite and nudging users toward more advanced, efficient models like GPT-4.1, o3, and o4-mini (source).

For teams that built workflows, marketing funnels, or customer bots around GPT-4.5’s conversational tone, this shift forces a migration to newer models. Yet, it also presents a chance to optimize for cost-per-token, responsiveness, and accuracy. Recommendation: complete migration before deadlines, benchmark the replacements, and embed agility in your LLM roadmap to handle future model churn.
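One way to embed that agility is to keep model lifecycle data in a single table and resolve the model name at call time, so a retirement becomes a config change rather than a code hunt. The sketch below is illustrative only: the `ModelPolicy` class, the `resolve_model` helper, the model identifier strings, and the choice of GPT-4.1 as the replacement are all assumptions, and only the July 14, 2025 date comes from the announcement above.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Optional


@dataclass(frozen=True)
class ModelPolicy:
    """Lifecycle entry for one model; values are maintained by hand."""
    retires_on: Optional[date] = None   # None means no announced retirement
    replacement: Optional[str] = None   # where traffic goes after retirement


# Hypothetical table: identifiers and the replacement choice are assumptions.
POLICIES: Dict[str, ModelPolicy] = {
    "gpt-4.5-preview": ModelPolicy(date(2025, 7, 14), "gpt-4.1"),
    "gpt-4.1": ModelPolicy(),
}


def resolve_model(requested: str, today: date,
                  policies: Dict[str, ModelPolicy] = POLICIES) -> str:
    """Follow replacement links until a model that is still live is found."""
    name = requested
    visited = set()
    while True:
        if name not in policies:
            raise KeyError(f"no lifecycle policy for model {name!r}")
        policy = policies[name]
        # Assumption: a model counts as retired on its retirement date itself.
        if policy.retires_on is None or today < policy.retires_on:
            return name
        if policy.replacement is None or name in visited:
            raise RuntimeError(f"{requested!r} is retired with no live replacement")
        visited.add(name)
        name = policy.replacement
```

Calling `resolve_model("gpt-4.5-preview", date.today())` from every workflow means the next deprecation only requires a new row in the table, plus a benchmark run against the replacement.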

GPT-5 Rumors Build Excitement: The Dawn of a Unified LLM

Whispers of a July–August 2025 release for GPT-5 are gaining steam (source). Expectations point to a unified architecture blending text, image, voice, and possibly video, with improved reasoning, long-term memory, and real-time multimodal interaction.

Such capabilities will be game-changing for marketers: envision AI that can analyze on-brand images, draft scripts, summarize sales calls, or trigger follow-ups automatically. Start experimenting now with multimodal prompt structures, prepping your stack for richer, visual-led content generation.

Claude Evolves: No-Code App Creation & Agentic Experiments

Earlier this week, Anthropic unveiled a major upgrade for Claude through “Artifacts beta,” enabling users to build and share full AI-powered apps using natural language prompts—all within the Claude interface (source). Claude becomes more than an assistant; it’s a no-code AI platform.

Anthropic also piloted “Project Vend,” letting Claude operate a real office store as a middle-manager agent, handling inventory, payments, and customer interaction. Mistakes occurred (such as directing customers to a Venmo account that did not exist), but the experiment suggested that agentic AI is becoming viable in shop-floor scenarios (source).

What it means for business: Marketers and operations teams can now prototype AI-led workflows (lead triage bots, content supervisors, or basic analytics tools) without developer dependency. Pilot these features now; expect mainstream AI platforms to copy this model soon, making no-code app creation a competitive baseline.

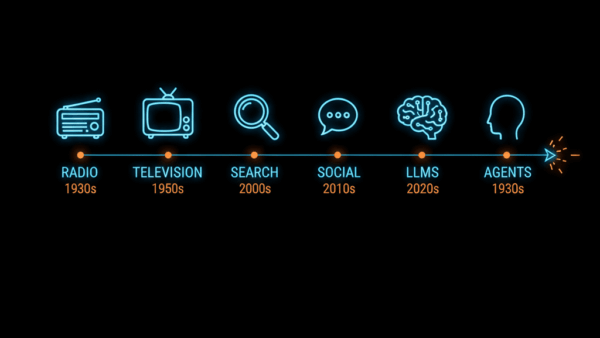

Agentic AI & Modular Tool Ecosystems

The real transformation is happening behind the scenes, with agentic AI frameworks like AutoGen, CrewAI, and LangGraph enabling chatbots to sequence prompts, trigger actions, and close workflows automatically. The “agentic web” is becoming reality: a landscape where autonomous agents do more than converse; they act.

For example, lead qualification, candidate screening, or document summarization can now run end-to-end without human intervention. This progression demands that marketers define guardrails and human oversight early, but it opens doors to scale high-touch processes affordably and with auditability.
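A guardrail of this kind can be as simple as a pair of thresholds plus an audit trail. The following is a minimal sketch, assuming the agent emits a qualification score between 0 and 1; the `Guardrail` and `Decision` names, the action labels, and the threshold values are hypothetical and not part of any framework named above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Decision:
    lead_id: str
    score: float   # agent's qualification score in [0, 1]
    action: str    # "auto_follow_up", "auto_discard" or "human_review"


@dataclass
class Guardrail:
    approve_at: float = 0.85   # at or above: the agent may act alone
    reject_at: float = 0.15    # at or below: the agent may drop the lead
    audit_log: List[Decision] = field(default_factory=list)

    def route(self, lead_id: str, score: float) -> Decision:
        """Decide who handles the lead and record the decision for audit."""
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score must be in [0, 1], got {score}")
        if score >= self.approve_at:
            action = "auto_follow_up"
        elif score <= self.reject_at:
            action = "auto_discard"
        else:
            action = "human_review"   # ambiguous cases always go to a person
        decision = Decision(lead_id, score, action)
        self.audit_log.append(decision)
        return decision
```

Starting with a wide human-review band and narrowing it as the log builds confidence keeps oversight in place while the workflow scales.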

Encoder LLMs Resurgence: NeoBERT & MaxPoolBERT

While GPT and Claude dominate attention, NeoBERT (250M params, 4K context) and MaxPoolBERT (layer-level token pooling) are quietly reshaping efficiency in search, tagging, and metadata generation (NeoBERT paper, MaxPoolBERT paper). These lightweight encoders achieve enterprise-grade semantic insights, but with far less compute and faster deployment cycles.

Actionable tip: Use NeoBERT for semantic clustering in content catalogs or personalization engines. MaxPoolBERT can tackle classification tasks—like content categorization or user sentiment analysis—effectively and affordably.
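To make the clustering tip concrete, here is a small sketch of how catalog items could be grouped once an encoder has produced an embedding for each one. It does not load NeoBERT itself: the three-dimensional vectors are hand-written toy values standing in for real encoder output, and the greedy threshold method and the 0.8 cutoff are illustrative assumptions rather than a recommended algorithm.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def cluster(embeddings: Dict[str, Sequence[float]],
            threshold: float = 0.8) -> List[List[str]]:
    """Greedy single pass: join the first cluster whose seed item is similar enough."""
    clusters: List[List[str]] = []
    for item, vector in embeddings.items():
        for members in clusters:
            if cosine(vector, embeddings[members[0]]) >= threshold:
                members.append(item)
                break
        else:
            clusters.append([item])   # no match, so this item seeds a new cluster
    return clusters


# Toy vectors; in practice these would come from the encoder.
toy = {
    "spring-sale-email": [0.9, 0.1, 0.0],
    "summer-sale-email": [0.8, 0.2, 0.1],
    "hvac-repair-guide": [0.1, 0.9, 0.2],
    "furnace-tune-up-faq": [0.0, 0.8, 0.3],
}
groups = cluster(toy)
```

With these toy values the two promotional emails land in one group and the two service articles in another. Real embeddings have hundreds of dimensions, but the grouping logic is the same.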

AI Infrastructure Is Going Modular & Fast

In a striking update, OpenAI recently noted that GPT-4 could now be retrained from scratch by a team of 5–10 engineers, a steep drop from the massive team sizes of its original release (source). This exemplifies modular AI pipelines and reproducible model components.

For business leaders, this accelerates vendor innovation and lowers technical barriers. In response, internal teams should anticipate more niche LLMs tailored to specific vertical needs—legal, lifestyle, industry—and architect systems that can plug in or swap models as needed.
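A system that can plug in or swap models usually comes down to one small interface that every backend satisfies, plus a routing table from business task to backend. The sketch below shows that shape under stated assumptions: `TextModel`, `EchoModel`, and `ModelRegistry` are hypothetical names, and `EchoModel` is a stand-in where a real adapter would wrap a vendor SDK call.

```python
from typing import Dict, Protocol


class TextModel(Protocol):
    """The only thing the rest of the stack is allowed to depend on."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real adapter would call a vendor SDK here."""
    def __init__(self, tag: str) -> None:
        self.tag = tag

    def complete(self, prompt: str) -> str:
        return f"[{self.tag}] {prompt}"


class ModelRegistry:
    """Maps business tasks to whichever backend is currently assigned."""
    def __init__(self) -> None:
        self._models: Dict[str, TextModel] = {}
        self._routes: Dict[str, str] = {}

    def register(self, name: str, model: TextModel) -> None:
        self._models[name] = model

    def assign(self, task: str, model_name: str) -> None:
        if model_name not in self._models:
            raise KeyError(f"unknown model {model_name!r}")
        self._routes[task] = model_name

    def run(self, task: str, prompt: str) -> str:
        return self._models[self._routes[task]].complete(prompt)


registry = ModelRegistry()
registry.register("general", EchoModel("general"))
registry.register("legal", EchoModel("legal"))
registry.assign("contract_summary", "general")
registry.assign("contract_summary", "legal")   # swap without touching callers
```

Callers only ever name the task, so moving `contract_summary` to a vertical model is a single `assign` call.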

Strategic Recommendations for Marketing Leaders

Plan for rapid LLM turnover

Treat model versions like monthly OS updates: test new ones, migrate quickly, and document differences in tone and behavior.

Embrace both encoder and generative models

Use encoders for categorization, retrieval, personalization; generative LLMs for content, summaries, and interactive assets.

Structure for GEO success

Write for machine and human: use FAQ schema, concise summaries, and embeds to surface in agent responses and answer engines like Perplexity.

Pilot no-code AI tools now

Build simple Claude-powered bots or workflows using Artifacts beta to surface product suggestions, customer triage, or internal operations.

Prototype agentic workflows

Map processes from start to finish (lead capture to follow-up) and bring them into agent frameworks like LangGraph to test ROI.

Monitor new model rollouts

GPT-5, GPT-4.1 improvements, and emerging vertical LLMs will launch soon. Establish a cadence to evaluate them every 4–6 weeks.
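For the FAQ schema mentioned under “Structure for GEO success,” the markup in question is schema.org’s `FAQPage` type, normally embedded in a page as JSON-LD. The helper below is a minimal sketch: `faq_jsonld` is a hypothetical function name and the sample question is invented for illustration. Whether any given answer engine actually uses this markup is not something the code can guarantee.

```python
import json
from typing import Iterable, Tuple


def faq_jsonld(pairs: Iterable[Tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Invented sample content, for illustration only.
markup = faq_jsonld([
    ("How often should we re-evaluate our models?",
     "Every 4-6 weeks, or whenever a vendor announces a deprecation."),
])
```

The returned string goes inside a `<script type="application/ld+json">` tag on the page that carries the matching visible FAQ text.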

Final Thoughts

AI is breaking free from the lab and firmly entering production. This rapid maturity, marked by smarter models, no-code creation, and autonomous agents, demands proactive adaptation. The winners will be those who treat AI as an evolving utility, not a one-time project. Start now: audit your workflows, test with intent, and build a resilient AI strategy that keeps pace with the technology.

Matt Lawler
